Close-reading runaway slave advertisements published between 1835 and 1865 makes it possible to explore whether the locations listed in them follow different patterns from state to state, and in particular whether Texas differs from its neighbors. Newspapers from Mississippi, Texas, and Arkansas provide the data set for this analysis. The trends are most easily analyzed state by state, followed by a comparison of the overall trends across the states.

Between 1835 and 1865, the pattern in Arkansas’s runaway slave ads shifts with the state’s position relative to other states. Arkansas was a territory until it achieved statehood in 1836, and in the earlier part of this period it was the borderland of the United States: Texas declared independence in 1836 and remained independent of the United States until 1845. Arkansas, then, was essentially a western borderland. The Mississippi River along Arkansas’s eastern edge also gave slaves the opportunity to escape by boat, as the mulatto Billy attempted when fleeing from New Orleans (AR_18360526_Helena-Constitutional-Journal_18360526).

Many jailers’ notices in Arkansas advertise captured slaves from states farther east, indicating that Arkansas was a popular destination or a point on the route to freedom. For example, in 1836 two captured slaves, Jacob and Jupiter, stated “that they Belong to H. B. JOHNSON, residing in Yazoo county, Mississippi” (AR_18360705_Arkansas-Gazette_18360409). Similarly, the captured “Negro man” Henry claimed that his home was in Memphis, Tennessee, with a Mr. Staples (AR_18551123_Democratic-Star_18551123). Numerous other notices follow the same pattern.

In addition, many slaveowners from other states advertised for their runaways in Arkansas, indicating that they considered it a likely destination. For example, an 1838 advertisement in the Arkansas Gazette for George and James, two runaways from Mississippi, was also republished in the Memphis Enquirer and the Little Rock Gazette (AR_18380314_Arkansas-Gazette_18371002).

Although slaveowners generally seemed to assume that runaways would head west, a handful expected family ties to prove stronger. Martin Miller of Fayetteville, Texas, for example, advertised for his slave in the Arkansas Gazette: “Said Negro was brought from Georgia, and is probably making his way back to that State” (AR_18360909_Arkansas-Gazette_18360727).

Over time, these trends shifted. Arkansas lost its “borderland” status to Texas, and the fugitive slave advertisements changed accordingly. The number of runaways advertised from Arkansas increased, probably because of the state’s growing population. However, the number of jailers’ notices for slaves who claimed to be from other states also increased, suggesting that Arkansas still served as a way station for slaves on their journeys to Texas or Mexico.

Although slaveowners projected likely destinations onto their runaways, they acknowledged that these projections were merely assumptions. An 1836 ad from the Arkansas Gazette states: “I have dreamed, with both eyes open, that he went toward the Spanish county; but as dreams are like some would be thought honest men―quite uncertain―he may have gone some other directions.” Most fugitive slave advertisements used less flowery language, but they too acknowledged, more subtly, that the projected direction might be wrong.

In Mississippi, both jailers’ notices and runaway ads tend to concern slaves from Mississippi itself. This suggests that Mississippi, unlike Arkansas, had a more stable slave economy and was less often a destination for runaways.

Texas, the focus of this research, offers data from the Texas Telegraph and the Texas Gazette. William Dean Carrigan, in his article “Slavery on the frontier: the peculiar institution in central Texas,” describes Texas as “a world torn in three directions by four different cultures.” The Native American tribes and the Mexican border both helped to define Texas as a borderland. How this borderland status shaped the movements of runaways, however, is still contested. Campbell states that runaways tended to head either toward Mexico (for freedom) or east (to rejoin relatives from whom they had been separated), but he does not indicate which was more prevalent.

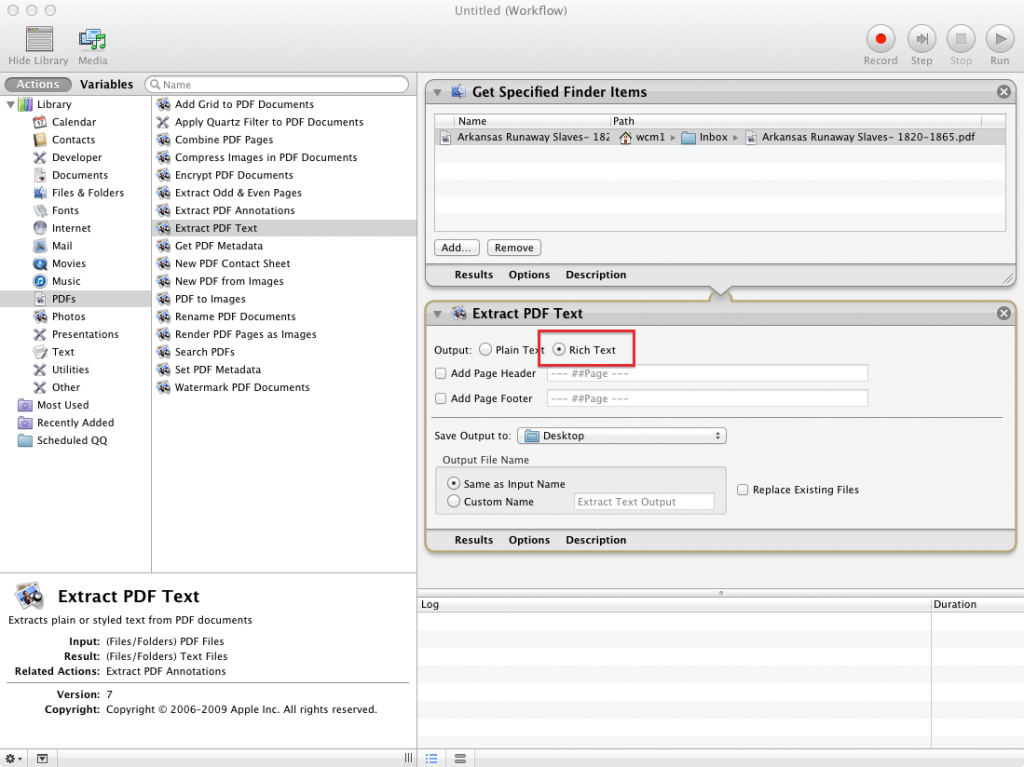

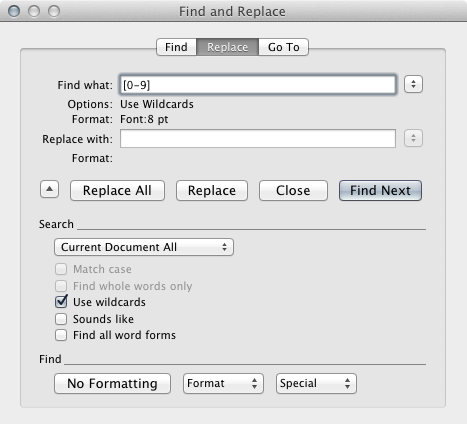

The size of the data set, combined with the time-consuming and labor-intensive nature of close reading, has several implications. When data are analyzed by eye, preconceived assumptions come into play, and unexpected results are less likely to be noticed even when they are present. Digital analysis, by contrast, can surface such results more easily by reorganizing the data independently of those expectations. Without digital tools to sift through the information and help identify patterns, human error in evaluating the advertisement trends is more likely, especially error shaped by expectations. Examining several elements at once, or the connections between them, is also more difficult. For example, there may be a correlation between the amount of the reward and either the projected location of the slave or the distance between where the ad was published and where the owner lived. Without the extremely labor-intensive process of building a spreadsheet, such evidence is difficult to analyze. Specific locations (cities and plantations) also tend to be collapsed into more recognizable states and counties. With over 1,000 advertisements in the Mississippi corpus alone, trends are very difficult to find in a short period of time.
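As a rough illustration of the kind of re-organization described above, the sketch below tallies which state names appear in each ad’s text, grouped by the state where the ad was published. It is a minimal sketch under stated assumptions, not the method used in this project: the function name `tally_mentions` and the `STATES` list are hypothetical, and it assumes that the two-letter prefix of an ad identifier (such as `AR` in `AR_18360705_Arkansas-Gazette_18360409`) marks the state of publication. The sample texts are the quotations cited earlier in this essay.

```python
import re
from collections import Counter, defaultdict

# Hypothetical, deliberately short list of state names to search for.
STATES = ["Mississippi", "Texas", "Arkansas", "Tennessee", "Georgia", "Louisiana", "Alabama"]
# Match whole, capitalized state names only (abbreviations like "Miss." are missed).
STATE_PATTERN = re.compile(r"\b(" + "|".join(STATES) + r")\b")


def tally_mentions(ads):
    """Count state names mentioned in ad texts, grouped by publishing state.

    `ads` maps an ad identifier to its transcribed text. Assumption: the
    prefix before the first underscore is the state of publication.
    """
    counts = defaultdict(Counter)
    for ad_id, text in ads.items():
        pub_state = ad_id.split("_", 1)[0]
        counts[pub_state].update(STATE_PATTERN.findall(text))
    return counts


# Sample input: two quotations from Arkansas ads cited in this essay.
ads = {
    "AR_18360705_Arkansas-Gazette_18360409":
        "that they Belong to H. B. JOHNSON, residing in Yazoo county, Mississippi",
    "AR_18360909_Arkansas-Gazette_18360727":
        "Said Negro was brought from Georgia, and is probably making his way back to that State",
}
result = tally_mentions(ads)
print(dict(result["AR"]))
```

Run over a full corpus, the same tallies could be exported to a spreadsheet and compared against reward amounts, although a simple keyword match like this one would still miss abbreviations, misspellings, and place names below the state level.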

These observations suggest that a state’s borderland status does change the location trends present in its runaway slave advertisements. Digital tools, however, will help us test these conclusions, compare the findings of digital analysis with those of close reading, and possibly reveal unexpected patterns in the data set.