Little research has been done on slavery in Texas. John Hope Franklin and Loren Schweninger’s Runaway Slaves: Rebels on the Plantation, one of the most comprehensive studies of runaway slaves in the South, does not include Texas in its data or analysis; instead, it implies that slavery was relatively uniform throughout the South. Randolph B. Campbell opened the discussion of slavery in Texas with his book An Empire for Slavery: The Peculiar Institution in Texas, 1821-1865, but agreed with Franklin and Schweninger that slavery was similar across the region. William Dean Carrigan took another position in the chapter on Texas in his book Slavery and Abolition: he argued that slavery in Texas (specifically in central Texas) was distinct from that in other Southern states. The scarcity of scholarship on the topic indicates the need for additional research in order to reach a more definitive conclusion.

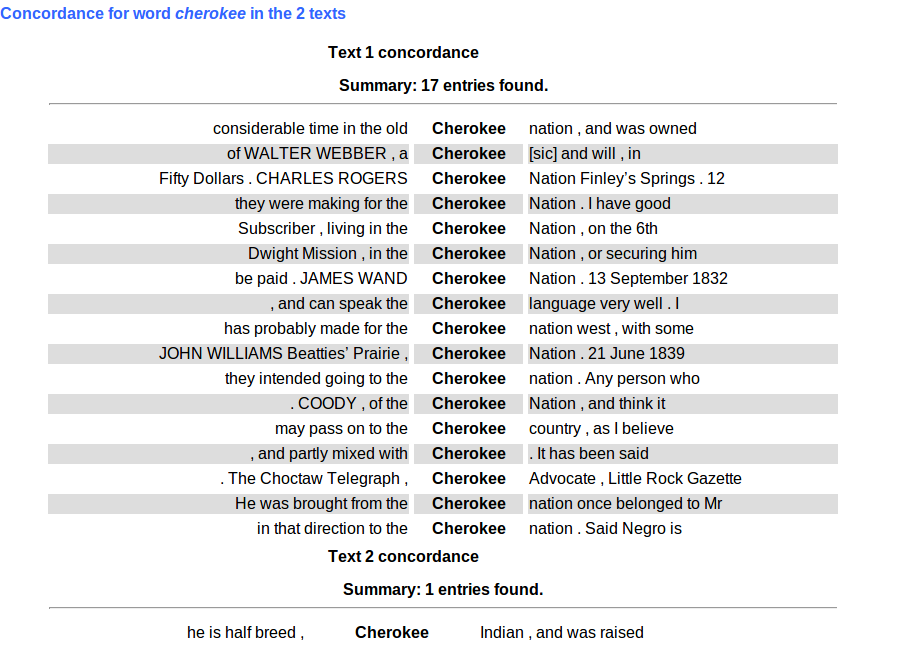

Why would Texas be different from other states? Because Texas was the frontier of plantation agriculture, many diverse groups interacted with slaveholders and their slaves. The presence of Mexicans (to the south) and Indians (to the north and west) heightened slaveholders’ fears and possibly increased runaway attempts as well. The proximity of Mexico and the absence of a fugitive slave law there made it a more desirable destination for runaways than the North, which was still subject to fugitive slave laws. The presence of Indian tribes just on the outskirts of the plantation culture provided another possible refuge. Although not all Indians were friendly to runaway slaves, and although the proximity of Mexico did not necessarily result in more runaways, both of these factors could have shaped the culture of slavery in Texas. In addition, the lower population density and wooded terrain of central Texas were possible advantages for runaways.

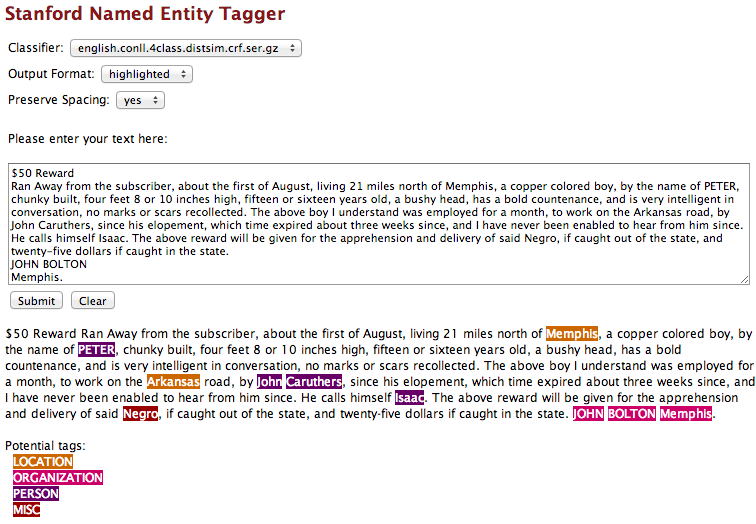

These factors not only framed the range of options available to runaways but also shaped slaveholders’ perceptions of their slaves. How did slaveholders react to the many runaway possibilities? Did they treat or perceive their slaves differently? Or were Texas slaveholders essentially the same as slaveholders in any other state? Runaway slave advertisements offer a glimpse into these perspectives through the language they use to describe the slaves. These advertisements were prevalent throughout the South prior to the Civil War and are thus an important resource for historians. Our project will compare Texas advertisements (from the Houston Telegraph) with those from other states in order to contribute toward a more comprehensive view of slavery in Texas.

To accomplish this, we will use various digital tools. The term “digital history” covers two different practices: using digital tools to discover new information and using digital media to present those findings. By exploring different methodologies, we may be able to benefit historians as a whole by contributing to future ways of working with data. We are also interested in the digital presentation of history: what are the benefits and disadvantages of each method? The basic essay format is only one of many ways of presenting information, and other genres provide unique perspectives on the same argument. These explorations will contribute to both the historical and the methodological sides of the study of Texas runaway slaves and of the digital humanities, allowing our research to stretch beyond the specific case toward future possibilities of genre and method.