Some of you expressed an interest in being able to quickly count all the ads in a folder and determine how many were published in a given year, decade, or month (to detect seasonal patterns across the year).

Here is a script that can do that. It is designed to work on Mac or Linux systems.

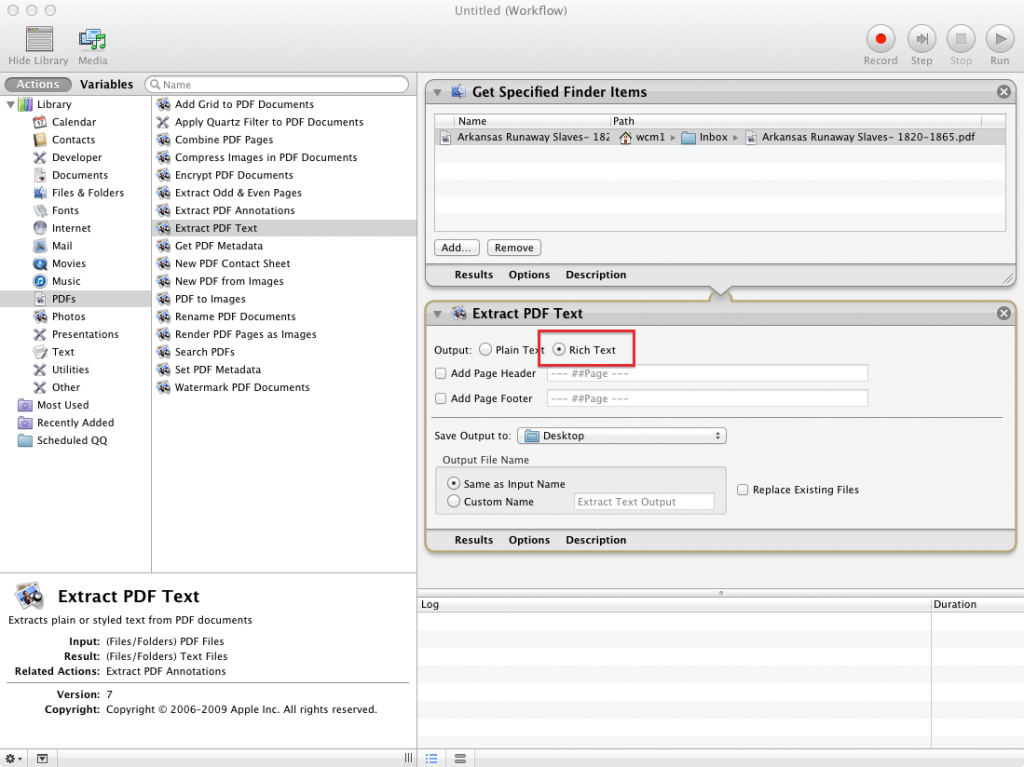

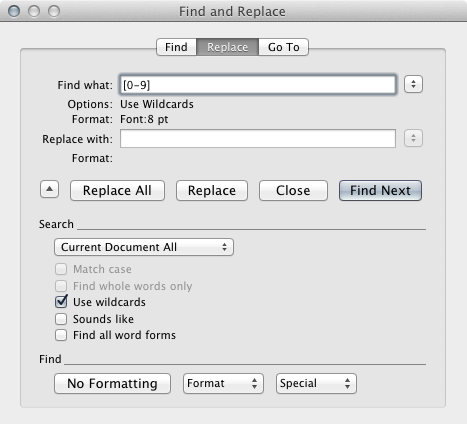

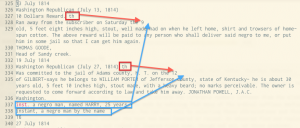
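To give a sense of what kind of counting the script does, here is a minimal sketch of the same idea in plain shell. It is not the code in countads.sh. It assumes, purely for illustration, that each ad is a `.txt` file whose name contains its publication date as YYYY-MM-DD, and the function name `count_ads` is hypothetical.

```shell
# Hypothetical sketch of the counting idea; this is NOT the real countads.sh.
# Assumes each ad is a .txt file whose name contains a date like 1836-07-14.
# Usage: count_ads [DIRECTORY]   (defaults to the current directory)
count_ads() (
  dir=${1:-.}
  # Collect one YYYY-MM-DD date per ad, taken from the first date in its filename.
  dates=$(
    for f in "$dir"/*.txt; do
      [ -e "$f" ] || continue   # no .txt files: the glob stays unexpanded, so skip it
      basename "$f" | grep -oE '[0-9]{4}-[0-9]{2}-[0-9]{2}' | head -n 1
    done
  )
  if [ -z "$dates" ]; then
    printf 'TOTAL\t0\n'
  else
    printf 'TOTAL\t%s\n' "$(printf '%s\n' "$dates" | wc -l | tr -d ' ')"
    # Decade = first three digits of the year plus "0s" (e.g. 183 -> 1830s).
    printf 'DEC\tADS\n'
    printf '%s\n' "$dates" | cut -c1-3 | sort | uniq -c | awk -v OFS='\t' '{print $2 "0s", $1}'
    # Year = first four characters of the date.
    printf 'YEAR\tADS\n'
    printf '%s\n' "$dates" | cut -c1-4 | sort | uniq -c | awk -v OFS='\t' '{print $2, $1}'
    # Month = characters 6-7 of the date; adding 0 turns "07" into 7.
    printf 'MONTH\tADS\n'
    printf '%s\n' "$dates" | cut -c6-7 | sort | uniq -c | awk -v OFS='\t' '{print $2 + 0, $1}'
  fi
)
```

The core move is `sort | uniq -c`, which counts identical lines; cutting the date down to three, four, or the month characters before counting is what turns one list of dates into decade, year, and month tallies. This sketch lists only the decades, years, and months that actually occur, and it separates columns with tabs so that redirected output opens as columns in a spreadsheet.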

To use it, you should first download our adparsers repo by clicking on the "Download Zip" button on this page:

Unzip the downloaded file, and you should then have a directory that contains (among other things) the countads.sh script.

You should now copy the file to the directory that contains the ads you want to count. You can do this by drag-and-drop, or from your terminal with the `cp` command. (If you have forgotten what that command does, revisit the Command Line bootcamp that was part of the MALLET homework.) Once the script is in the directory, navigate to that directory in your terminal and run the command like this:

```
./countads.sh
```

If you get an error message, you may need to follow the instructions in the comments at the start of the script (which you can read on GitHub) to change the script's permissions. But if all goes well, you'll see a printed breakdown of chronological counts. For example, when I run the script in the directory containing all our Mississippi ads, it returns this:

```
TOTAL 1632
DEC ADS
1830s 1118
1840s 178
1850s 133
1860s 4
YEAR ADS
1830 30
1831 54
1832 87
1833 68
1834 143
1835 157
1836 262
1837 226
1838 63
1839 28
1840 16
1841 16
1842 22
1843 33
1844 44
1845 25
1846 14
1847 1
1848 5
1849 2
1850 11
1851 17
1852 19
1853 15
1854 7
1855 9
1856 11
1857 23
1858 13
1859 8
1860 4
MONTH ADS
1 100
2 89
3 103
4 130
5 160
6 161
7 188
8 150
9 150
10 149
11 146
12 86
```

If you choose, you can also "redirect" this output to a file, like this:

```
./countads.sh > filename.txt
```

Now you should be able to open filename.txt (which you can name whatever you want) in Microsoft Excel, and you'll have a spreadsheet with all the numbers.
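If you only want one part of the output, say the month counts for a chart, a small awk filter can pull out a single section. This is a sketch that assumes the output keeps the layout shown in the Mississippi example (a header line whose second word is ADS, followed by its rows); the `section` function name is hypothetical and not part of adparsers.

```shell
# Hypothetical helper, not part of adparsers.
# Prints only the rows under one header (DEC, YEAR, or MONTH) of countads.sh output.
# Usage: ./countads.sh | section MONTH
section() {
  awk -v want="$1" '
    $2 == "ADS" { on = ($1 == want); next }   # a header line starts a new section
    on && NF    { print }                     # print non-blank rows of the chosen section
  '
}
```

For example, `section MONTH < filename.txt > months.txt` would write just the month rows to `months.txt`, ready to paste into a chart.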

The script may seem to have limited value on its own; its usefulness comes from first gathering an interesting set of ads into a directory. For example, if you only wanted to know the month distribution of ads in a particular year, you could first move all the ads from that year into a directory and run the script from within it. You'd get lots of zeroes for all the years you're not interested in, but you would get the month breakdown you are interested in. Depending on which ads you put in the directory you are counting, you can get a lot of useful data that can then be graphed or used in further calculations.
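One way to gather a single year's ads is sketched below. It assumes, hypothetically, that each ad is a `.txt` file whose name contains its date as YYYY-MM-DD; the `gather_year` function and the folder names are illustrative only and are not part of adparsers.

```shell
# Hypothetical helper, not part of adparsers.
# Assumes ad filenames contain a date like 1836-07-14.
# Usage: gather_year YEAR SOURCE_DIR DEST_DIR
gather_year() (
  year=$1 src=$2 dest=$3
  mkdir -p "$dest"
  for f in "$src"/*.txt; do
    [ -e "$f" ] || continue   # no .txt files: the glob stays unexpanded, so skip it
    # Copy the ad only if its filename contains YEAR-MM-DD for the requested year.
    case $(basename "$f") in
      *"$year"-[0-9][0-9]-[0-9][0-9]*) cp "$f" "$dest"/ ;;
    esac
  done
)
```

For example, `gather_year 1836 mississippi ms-1836` would fill a folder `ms-1836` with the 1836 ads; you could then copy `countads.sh` into it and run it there. The sketch copies rather than moves so that your full set of ads stays intact; swap `cp` for `mv` if you really do want to move them.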