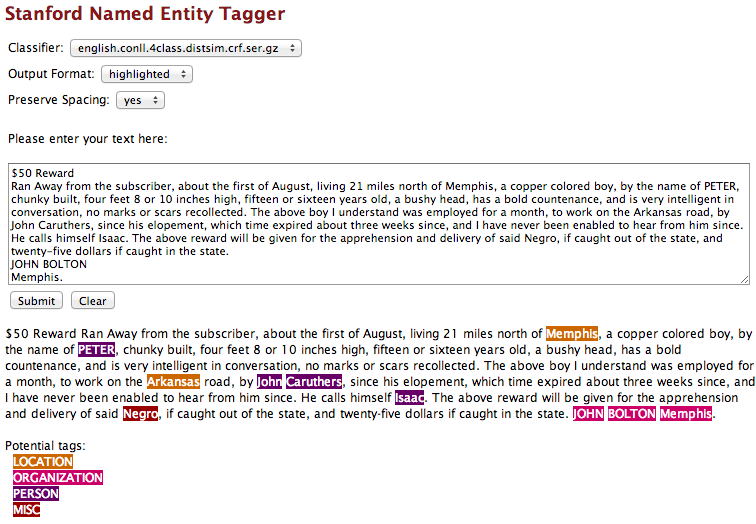

You may have noticed that I was able to put a pretty clean ZIP file of Arkansas ads into our private repository. As you know, we've had trouble copying text out of the wonderful PDFs posted by the Documenting Runaway Slaves project: pasting from a PDF into a text file mixes footnotes and page numbers in with the text of the ads, and superscript characters end up in odd places. This makes it difficult for us to do the kinds of text mining and Named Entity Recognition that we're most interested in. In this post I'll quickly share how I dealt with these difficulties.

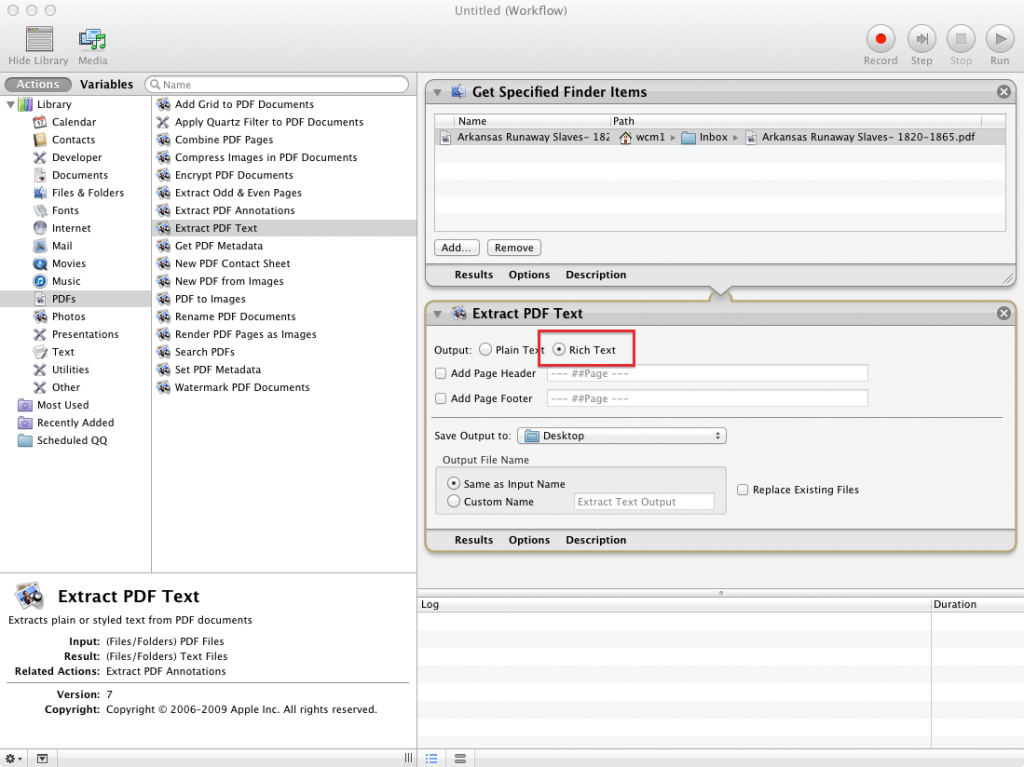

The key first step was provided by this tutorial on using the Automator program bundled with most Mac computers to extract Rich Text from PDFs. The workflow I created looked like this:

Extracting the text as "Rich Text" was the key, because RTF keeps formatting such as font size, which the later steps depend on. Running this workflow put an RTF file on my desktop that I then opened in Microsoft Word, which (I must now grudgingly admit) has some very useful features for a job like this. When I opened the file, for example, I noticed that all of the footnote text was set in the same font size. I then used Word's Find and Replace, with its formatting options, to find and delete all text of that size.
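Word does this through a dialog, but the rule behind it is simple enough to sketch in Python. The sketch below assumes a hypothetical representation of the document as a list of formatted runs (the `Run` class is invented for illustration; it is not how Word or RTF actually store text), and the 8 pt footnote size is likewise just an example value.

```python
from dataclasses import dataclass


@dataclass
class Run:
    """Hypothetical stand-in for a stretch of text that shares one format."""

    text: str
    size: float  # font size in points


def drop_runs_of_size(runs: list[Run], size: float) -> list[Run]:
    """Keep every run except those set in the given font size."""
    return [run for run in runs if run.size != size]


# Illustrative input: 10 pt body text and 8 pt footnote text (sizes assumed).
runs = [
    Run("Text of the ad", 10.0),
    Run("Text of a footnote", 8.0),
]
cleaned = drop_runs_of_size(runs, size=8.0)
print("".join(run.text for run in cleaned))  # Text of the ad
```

Matching on exact size is what makes this blunt: anything else set at that size disappears too, which is why the next pass has to be more selective.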

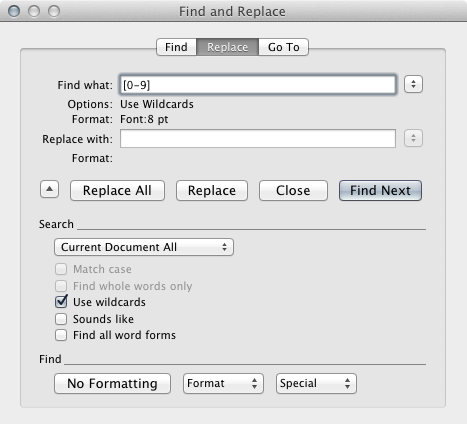

I used a similar technique to get rid of all the footnote reference numbers in the text, but in this case I had to be more specific, because some of the text I wanted to preserve (like the superscript "th," "st," and "nd" in ordinal numbers like "4th," "1st," and "2nd") was the same font size as the footnote markers. So I used Word's own version of regular expressions (called wildcards) to find only numbers of that font size. In other words, the "Advanced Find and Replace" dialog I used looked like this:

I used the same technique to eliminate the reference numbers left over from the deleted footnotes, which were all set in an even smaller font size. Similar adjustments can be made by noticing that many of the ordinal suffixes mentioned earlier ("th," "st," and "nd") are "raised" or "lowered" by a certain number of points. You can see this by selecting one of those suffixes and opening the Font window in Word; the "Advanced" tab shows whether the text has been raised or lowered. An advanced Find and Replace that changed all text raised or lowered by those specific amounts into text that is neither raised nor lowered fixed some, though not all, of these problems.
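The two Word passes above (delete only digits of the marker size, then reset raised or lowered text) can be sketched the same way. Again this is a minimal sketch on a hypothetical run model, assuming each footnote marker sits in its own run; the 7 pt marker size and 3 pt offsets are made-up example values, and the regex only approximates what a Word wildcard search for digits finds.

```python
import re
from dataclasses import dataclass, replace


@dataclass
class Run:
    """Hypothetical formatted run: text, font size, and baseline offset."""

    text: str
    size: float  # font size in points
    position: float = 0.0  # points raised (+) or lowered (-) from the baseline


# Digits only, roughly what a Word wildcard search for [0-9]{1,} finds.
DIGITS_ONLY = re.compile(r"[0-9]+")


def drop_marker_numbers(runs: list[Run], marker_size: float) -> list[Run]:
    """Remove marker-size runs made only of digits (footnote references),
    while keeping same-size ordinal suffixes such as "th"."""
    return [
        run
        for run in runs
        if not (run.size == marker_size and DIGITS_ONLY.fullmatch(run.text))
    ]


def reset_baseline(runs: list[Run], offsets: set[float]) -> list[Run]:
    """Put runs raised or lowered by one of the given offsets back on the baseline."""
    return [
        replace(run, position=0.0) if run.position in offsets else run
        for run in runs
    ]


runs = [
    Run("on the 4", 10.0),
    Run("th", 7.0, position=3.0),  # ordinal suffix: keep the text
    Run("12", 7.0, position=3.0),  # footnote marker: drop it
    Run(" of May", 10.0),
]
cleaned = reset_baseline(drop_marker_numbers(runs, marker_size=7.0), {3.0, -3.0})
print("".join(run.text for run in cleaned))  # on the 4th of May
```

Combining "same size" with "digits only" is what lets the suffixes survive while the markers go.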

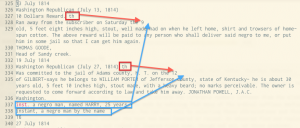

At this point I had reached the limit of what I could do with Word's formatting Find and Replace, so I saved my document as a Plain Text file (checking the UTF-8 encoding option to make things easier for our Python parsing script later on) and opened it in a text editor. There were still some problems (though not as many!) in the text:

The main problem seems to arise where there was a superscript ordinal suffix in the first line of an ad. As you can see, the "th" ends up getting booted up to the first line, and the remainder of the line gets booted down to the bottom of the page. Fortunately, there seems to be some pattern to this madness, a pattern susceptible to regular expressions. I also noticed that the orphaned line fragments following ordinals always seem to be moved to the bottom of the "page," right before the page number (in this case "16"). This made it possible to do a regex search for any line ending in "th" (or "st" or "nd") that is followed by a line ending in a number, and to replace each match with text that moves the suffix to where it belongs. Though it took a while to confirm each of these replacements by hand (I was worried about inadvertently destroying text), it wasn't too hard to do.
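Here is a minimal Python sketch of that search and replace. The post doesn't show the exact pattern, so the layout is an assumption: the displaced suffix is the last token on one line, and the very next line ends with the bare digits that lost it. The `\b` guard keeps words like "month" or "and" from matching, but a pattern like this can still misfire, so the function also returns every matched span for checking by eye. The sample lines are invented.

```python
import re

# Assumed layout (not confirmed by the source): the displaced suffix is the last
# token on one line, and the next line ends with the digits that lost it, e.g.
#   "Fifty Dollars Reward. th"
#   "RANAWAY on the 4"
SUFFIX_PATTERN = re.compile(
    r"^(?P<before>[^\n]*?)[ \t]*\b(?P<suffix>th|st|nd)[ \t]*\n"
    r"(?P<digits_line>[^\n]*\d)[ \t]*$",
    re.MULTILINE,
)


def reattach_suffixes(text: str) -> tuple[str, list[str]]:
    """Move each stranded suffix onto the number on the following line.

    Returns the repaired text plus every matched span, so each replacement
    can still be confirmed by hand. If a suffix was alone on its line, that
    line becomes blank; blank lines are removed in a later step anyway.
    """
    matches: list[str] = []

    def fix(match: re.Match) -> str:
        matches.append(match.group(0))
        return (
            f"{match.group('before')}\n"
            f"{match.group('digits_line')}{match.group('suffix')}"
        )

    return SUFFIX_PATTERN.sub(fix, text), matches


repaired, to_review = reattach_suffixes("Fifty Dollars Reward. th\nRANAWAY on the 4\n16")
print(repaired)
```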

A second regex search, for page numbers, allowed me to find all of the orphaned fragments and move them by hand to the lines where they belonged (checking the master file from DRS in cases where it wasn't clear which ad a fragment went with). The final step (which we already learned how to do in class) was to use a regular expression to remove all the year headers and page numbers from the file, as well as any blank lines. Franco's drsparser script did the rest of the work, bursting the text file into individual ads and naming the files using the provided metadata.
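Both of these last regex steps can be sketched in a few lines of Python. The patterns are assumptions rather than the ones used in class: year headers are taken to be lines holding only a year from the 1700s or 1800s, and page numbers lines of one to three digits. Since orphaned fragments collected right before the page number, the first function simply lists the non-blank line above each page number for manual repair; the sample text is a placeholder.

```python
import re

# Assumed formats (not from the source file): a year header is a line holding
# only a year such as 1821, and a page number is a line of one to three digits.
YEAR_HEADER = re.compile(r"^[ \t]*1[78]\d{2}[ \t]*$")
PAGE_NUMBER = re.compile(r"^[ \t]*\d{1,3}[ \t]*$")


def orphan_candidates(lines: list[str]) -> list[tuple[int, str]]:
    """List (index, text) of the non-blank line just above each page number,
    where displaced fragments tended to collect, for moving back by hand."""
    return [
        (i - 1, lines[i - 1])
        for i, line in enumerate(lines)
        if i > 0 and PAGE_NUMBER.match(line) and lines[i - 1].strip()
    ]


def strip_headers_pages_blanks(text: str) -> str:
    """Drop year headers, page numbers, and blank lines in one pass."""
    kept = [
        line
        for line in text.splitlines()
        if line.strip()
        and not YEAR_HEADER.match(line)
        and not PAGE_NUMBER.match(line)
    ]
    return "\n".join(kept)


sample = "1821\nRANAWAY from the subscriber\n\nremainder of a displaced first line\n16\n"
print(orphan_candidates(sample.splitlines()))
print(strip_headers_pages_blanks(sample))
```

Keeping the page-number search separate from the deletion mirrors the order described above: fragments have to be rescued before the page numbers that mark them are removed.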