If you prefer, you can download these instructions in PDF form.

Runaway slave advertisements from nineteenth-century Texas appeared in newspapers that have been digitized. That is, they have, like all digital representations of analog sources, been partially digitized. The Portal to Texas History at the University of North Texas contains full-page images of many nineteenth-century newspapers, together with metadata about the newspapers themselves and OCR text for each newspaper page that makes it possible to search for text.

But these newspapers have not been digitized so as to provide metadata or descriptions at the level of individual articles. That means, to paraphrase Daniel J. Cohen and Roy Rosenzweig, part of the information visible to the eye (i.e., information about when a new article or ad begins and ends) has been lost (or at least not digitized) in the process of the newspaper’s "becoming digital."

This presents a problem for researchers, like us, who are interested in a particular kind of article: runaway slave advertisements. In this homework assignment, you will engage in the practice of digitization by looking through page images from one year of the Telegraph and Texas Register, identifying advertisements pertaining to runaway slaves, and inputting some basic metadata about each ad into the collaborative spreadsheet that you used in Homework #1. In the process you will also learn to pay attention to the "interface" of a search database and gather new information about how acts of resistance or flight by enslaved people were represented in primary sources.

Objectives

- To gain familiarity with how one major digitization project has decided to produce and share digital objects.

- To generate new questions about the kinds of information contained in runaway slave advertisements and how they changed over time.

- To help build a complete database of runaway slave advertisements found in one of Texas’s longest-running nineteenth-century newspapers.

Before You Begin

This homework assignment will require you to spend time using the Portal to Texas History, so begin by watching this introductory video about the project:

Also spend some time browsing the site and looking through the help guide for the site, particularly the one on using newspapers. Think about how the site is organized and what sorts of searching and browsing are possible (or not possible) with the user interface provided. Run a few searches about something that interests you, and click through on the results. Get a feel for how the site "works," spending at least 10 minutes on this before proceeding.

Now head over to this lesson from the Programming Historian website about downloading records. You’re not actually going to be "programming" for this assignment or doing any downloading; you should only read the first three major sections of this lesson: "Applying Our Historical Knowledge," "The Advanced Search on OBO," and "Understanding URL Queries." These sections give you a tour through the Old Bailey Online, whose search query interface is broadly similar to the Portal to Texas History. Pay particular attention to what the lesson shows you about "query strings." Then go back to the Portal and run some more searches, noting how the values in the URL query strings change as you navigate through the site or run different searches.
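You do not need to write any code for this assignment, but if it helps to see what a "query string" is made of, here is a minimal Python sketch that splits a URL into its name/value pairs. The URL and its parameter names (`q`, `sort`, `start`) are made up for illustration; they are not the Portal's actual parameters, which you should discover by watching your own browser's address bar.

```python
from urllib.parse import urlsplit, parse_qs

def query_values(url):
    """Return the query-string parameters of a URL as a dict of lists."""
    # Everything after the "?" in a URL is the query string.
    return parse_qs(urlsplit(url).query)

# Hypothetical search URL (example.org is a placeholder, not the Portal).
example_url = "https://example.org/search/?q=runaway&sort=date_a&start=0"

params = query_values(example_url)
for name, values in params.items():
    print(name, "=", values)
```

Each value comes back as a list because a name can legally appear more than once in a query string.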

Now you are ready to proceed to the homework assignment.

Finding Ads

Step 1: Find Newspaper Issues

Each of you will receive an email from me assigning you a year (or the equivalent number of issues) from the Telegraph and Texas Register that you will be responsible for reading in search of runaway slave advertisements.

Your first task is to figure out how to perform a search (or modify a search URL) so as to pull up (in "date ascending" order) all of the issues from the newspaper in your time period.

Here’s an example of what such a "Search Results" page looks like for the 1843 volume of the Register:

Search Results showing all available issues from 1843
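Modifying a search URL by hand amounts to changing one value in its query string and leaving the rest alone. The sketch below shows that idea in Python, again under loud assumptions: the URL, the parameter name `sort`, and the value `date_a` are hypothetical stand-ins, not the Portal's real names. Editing the URL directly in your browser's address bar accomplishes the same thing.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

def set_query_param(url, name, value):
    """Return a copy of url with the query parameter `name` set to `value`."""
    parts = urlsplit(url)
    # Keep every existing pair except the one being replaced.
    pairs = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
             if k != name]
    pairs.append((name, value))
    return urlunsplit(parts._replace(query=urlencode(pairs)))

# Hypothetical URL and parameter names, for illustration only.
before = "https://example.org/search/?q=register&sort=relevance"
after = set_query_param(before, "sort", "date_a")
print(after)
```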

Once you have a page that you believe shows the first page of all the results from a search for issues of the newspaper in your assigned timeframe, tweet that URL directly to me @wcaleb with the course hashtag so that I can check the URL and make sure you have found all the relevant issues. I have to approve this URL by a reply tweet before you can continue.

Step 2: Find Runaway Ads

Now you will be ready to go into each issue and look for ads. You’ll click through to the "Read this Newspaper" tab of each issue, and then click on "Zoom (Full Page)" so that you can magnify the image. Use the arrow buttons at the top to flip through the various pages (or sequences) of the issue. Even though the ads are most likely to appear on pages 3 and 4, make sure that you at least run your eyes over every inch of every issue.

This will take time, so start early. I recommend that you time how long it takes you to get through one or two issues following the steps below, so that you can plan your schedule accordingly.

The ads will come in different formats and may contain very different amounts of information. Some of the ads will be posted by subscribers who are seeking to find a slave who has run away. Other ads will be posted by sheriffs or others who have captured a slave and are seeking the legal owner. If it looks like a runaway slave ad to you, or just looks like it has to do with runaway ads (e.g., a notice from the newspaper about how to submit a runaway ad, or an item about the different graphics used in ads), you should go ahead and enter it into the spreadsheet. Right now we just want to identify items of interest, so better to cast a wide net than a narrow one!

Once you’ve found an ad, you’ll need to enter it on the Google spreadsheet of runaway ads already collected. Be sure to carefully follow these instructions when you enter:

- Before you start entering, find the appropriate "sheet" by looking at the tabs at the bottom of the window. Each year has its own "sheet" or tab, so find the one that belongs to your year.

- As shown in the labels at the top of the sheet, you should list the year, month (as a number) and day (as a number) of the issue on each row.

- Also include the full citation (which you can copy and paste from the top of the zoomed page at the Portal to Texas History):

Full Cite Information for the Issue, as seen on Zoomed Page

- To generate a "permalink" URL that can be copied into the permalink column, first use the "Zoom" feature to enlarge the ad and center it in your browser’s viewing window. Make it as large as you can while still keeping all of the ad within view. Then click on the "Permalink" button while zoomed in on the ad.

Permalink button

- If you think you recognize the ad as one you have seen before in a previous issue, identify it as a reprint by placing an asterisk in the final labeled column. You can use the other blank columns to the right to make any helpful notes to yourself (for example, by noting the names as a way of helping you to remember which might be reprints).

Finally, if you go through an entire issue of the paper and find no runaway ads, make a single row that indicates the date of the issue, and then type "None" in the "Full Cite" column.
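To make the row layout described above concrete, here is a rough Python sketch of the two kinds of rows you will be entering: one for an ad, and one for an issue with no ads. You will type these directly into the Google spreadsheet, not generate them with code. The column labels other than "Full Cite" are my shorthand and may not match the sheet exactly, and the dates, citation, and permalink below are placeholders, not real entries.

```python
import csv
import io

# Assumed column labels; only "Full Cite" is quoted from the instructions.
COLUMNS = ["Year", "Month", "Day", "Full Cite", "Permalink", "Reprint"]

def ad_row(year, month, day, full_cite, permalink, reprint=False):
    """One row per ad; an asterisk in the last column marks a suspected reprint."""
    return [year, month, day, full_cite, permalink, "*" if reprint else ""]

def empty_issue_row(year, month, day):
    """One row for an issue with no runaway ads: 'None' goes in Full Cite."""
    return [year, month, day, "None", "", ""]

def to_csv(rows):
    """Render the header and rows as CSV text, for a quick visual check."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(COLUMNS)
    writer.writerows(rows)
    return buffer.getvalue()

# Placeholder values only.
rows = [
    ad_row(1843, 1, 4, "(citation copied from zoomed page)", "(permalink URL)"),
    ad_row(1843, 1, 4, "(citation copied from zoomed page)", "(permalink URL)",
           reprint=True),
    empty_issue_row(1843, 1, 11),
]
print(to_csv(rows))
```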

Step 3: Reflect on Findings

After you have finished looking through all of your assigned issues, return to the JSON gist that you submitted for your first assignment and notice what pieces of information you found significant about the three ads you looked at then. In the process of going through your year of newspapers, did you notice new kinds of information that you had not seen before? Are there name/value pairs you would add to your JSON if you were doing it again? Was there anything about the newspapers (either in the ads or in the surrounding material) that surprised or interested you?

For the final step in this assignment, leave a comment on this blog post answering at least one of these boldfaced questions. You may use a non-identifying pseudonym as you make your comment, so long as you let me know which pseudonym you used.
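If you want to think through the question about new name/value pairs concretely, the following Python sketch shows what adding pairs to a JSON record looks like. The field names (`owner`, `reward`, `capture_notice`, `jail_location`) and values are invented placeholders, not a required schema and not taken from any actual ad.

```python
import json

# Placeholder record standing in for one ad from your first assignment.
original = {"owner": "(name as printed)", "reward": "(amount as printed)"}

def add_pairs(record, **new_pairs):
    """Return a copy of record with extra name/value pairs added."""
    updated = dict(record)  # copy, so the original record is left unchanged
    updated.update(new_pairs)
    return updated

# Hypothetical new fields you might decide are worth recording.
revised = add_pairs(original, capture_notice=False, jail_location=None)
print(json.dumps(revised, indent=2))
```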

Summary

To recap, successful completion of this homework requires:

- A tweet to me, with the course hashtag and the URL to the first page of search results from Portal to Texas History containing all the available issues of the Telegraph and Texas Register in your assigned time period.

- A completed tab in the Google spreadsheet that documents all of the ads contained in the paper in the time you were assigned.

- A brief comment reflecting on what you saw in the newspaper.

Points will be deducted if the above technical requirements are not met, if the work contains numerous typographical errors, or if your blog comment does not seriously engage with the questions asked and reflect a thoughtful encounter with the newspapers you saw.

As in the first homework assignment, you can always take to Twitter if you need help. In keeping with the academic integrity policies for the course, however, do not get someone else to do the work for you, and be sure to acknowledge any pointers or technical assistance you received, in this case by noting it in your blog post comment.

Recent Pins

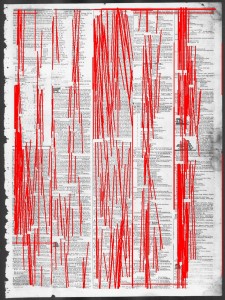

Recent Pins A typical image looked something like this.

A typical image looked something like this. After running the script on several images, I ended up with ~1600 negative images to use in training the cascade classifier. I supplemented that with some manually-cropped pics of common icons such as the one that appears to the left.

After running the script on several images, I ended up with ~1600 negative images to use in training the cascade classifier. I supplemented that with some manually-cropped pics of common icons such as the one that appears to the left.