These last few days, Franco and I have been developing a way to detect runaway slave ads in images of 19th-century newspapers. The Portal to Texas History has digitized copies of thousands of issues of Texas newspapers and is a source waiting to be explored for runaway slave ads. For example, a search for “runaway negro” in the full text (OCR transcriptions) of their collection yields 7,159(!) results. Clearly, that number is too high for anyone to peruse every possible match by hand.

Thus, we have been thinking about ways to automate the process. At the suggestion of Dr. McDaniel, we decided to use OpenCV, a popular open source computer vision library, to conduct object recognition for the classic runaway slave icon. You know, this one:

(In newspapers, from what I have seen, it usually appeared much smaller and simplified, as shown here).

OpenCV has a tool called Cascade Classifier Training that builds an XML file that can be used to detect objects. It requires a set of positive samples (images that contain the chosen object) and a set of negative samples (images that do not contain the object but come from a similar context). It works best with a large dataset of positive samples, and to generate one OpenCV provides a utility called “createsamples” (opencv_createsamples) that takes an image and applies transformations to it, such as adjustments in intensity, rotations, color inversions, and more, to make altered versions. Once the cascade has been trained, it can be used to efficiently detect and locate the desired object in other images.
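To make the last step concrete, here is a minimal sketch of what detection with a trained cascade could look like in Python. It is only an illustration, not code from this project: the function names, the detection parameters, and the classifier/cascade.xml path (the file opencv_traincascade writes into its -data folder) are assumptions.

```python
def to_corners(rects):
    """Convert (x, y, w, h) detections into (left, top, right, bottom) boxes."""
    return [(int(x), int(y), int(x + w), int(y + h)) for x, y, w, h in rects]


def find_icons(page_path, cascade_path="classifier/cascade.xml"):
    """Return corner boxes for every icon the cascade finds on one page image."""
    import cv2  # opencv-python, imported lazily so to_corners works without it

    cascade = cv2.CascadeClassifier(cascade_path)
    if cascade.empty():
        raise IOError(f"could not load cascade from {cascade_path}")

    gray = cv2.imread(page_path, cv2.IMREAD_GRAYSCALE)
    if gray is None:
        raise FileNotFoundError(page_path)

    # scaleFactor and minNeighbors are illustrative guesses, not tuned values.
    rects = cascade.detectMultiScale(
        gray, scaleFactor=1.1, minNeighbors=3, minSize=(60, 64)
    )
    return to_corners(rects)
```

Each returned box could then be cropped out of the page and checked by eye.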

So, the first order of business in preparing to do object recognition was to collect a set of runaway slave icons. I downloaded ~35 newspaper page images containing the icon and cropped each one so that only the icon was visible. The tutorials [1, 2, 3 ..others] I read suggested that for best results the positive images (images of the object to be detected) should all have the same aspect ratio. For simplicity, I made sure all my images were 60x64px.

Next I generated a set of negative (background) images that were from newspaper images that did not have the runaway icon. These had to be the same size as the positive images. I read that a large data set was especially needed for the negatives, so I wrote a simple script to crop newspaper page images into a series of individual 60×64 pics. For anyone curious, here’s a gist of the code.  A typical image looked something like this.
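For readers who cannot get to the gist, a cropping script along these lines might look like the sketch below. It is not the gist itself: the function names and output naming scheme are made up for illustration, and it assumes the Pillow library for the actual cropping.

```python
from pathlib import Path

TILE_W, TILE_H = 60, 64  # same size as the positive samples


def tile_boxes(page_w, page_h, tile_w=TILE_W, tile_h=TILE_H):
    """Return (left, upper, right, lower) boxes for every full tile on a page."""
    return [
        (x, y, x + tile_w, y + tile_h)
        for y in range(0, page_h - tile_h + 1, tile_h)
        for x in range(0, page_w - tile_w + 1, tile_w)
    ]


def crop_negatives(page_path, out_dir):
    """Save every full tile of one page image and return how many were written."""
    from PIL import Image  # Pillow, imported lazily so tile_boxes works without it

    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    stem = Path(page_path).stem
    with Image.open(page_path) as page:
        boxes = tile_boxes(*page.size)  # page.size is (width, height)
        for i, box in enumerate(boxes):
            page.crop(box).save(out_dir / f"{stem}_{i:05d}.png")
    return len(boxes)
```

Partial tiles at the right and bottom edges are dropped so that every negative has exactly the same dimensions as the positives.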

After running the script on several images, I ended up with ~1600 negative images to use in training the cascade classifier. I supplemented that with some manually-cropped pics of common icons such as the one that appears to the left.

Next I used the find command in Terminal to output text files listing all the positive and all the negative images. Then I created the “sample,” a binary file that contains all the positive images and is required by the cascade trainer (opencv_traincascade). Like I mentioned, when creating the sample, transformation settings are usually specified to multiply the amount of data available to train the cascade. I figured that the runaway icon would always appear upright, and I made sure my positive image set contained icons of varying clarity, so I just ran opencv_createsamples without any distortions.
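The listing step can also be done without find. Below is a rough Python equivalent, offered as a sketch only. The function names and the file suffix list are assumptions. The positive list uses the annotated format that opencv_createsamples accepts through its -info option (path, object count, then x y width height), with the single object covering the whole 60x64 crop.

```python
from pathlib import Path

IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg"}  # assumed set of image types


def list_images(folder):
    """Return every image file under folder, sorted for a stable listing."""
    return sorted(
        p for p in Path(folder).rglob("*")
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )


def write_negatives(folder, out_file):
    """Write one image path per line (the background list) and return the count."""
    paths = list_images(folder)
    Path(out_file).write_text("".join(f"{p.as_posix()}\n" for p in paths))
    return len(paths)


def write_positives(folder, out_file, width=60, height=64):
    """Write 'path 1 0 0 width height' per line and return the count."""
    paths = list_images(folder)
    Path(out_file).write_text(
        "".join(f"{p.as_posix()} 1 0 0 {width} {height}\n" for p in paths)
    )
    return len(paths)
```

Paths are written exactly as they are found under the folder argument, so whether they resolve correctly depends on the directory the OpenCV tools are run from.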

Finally, I had all I needed to train the cascade. I ran the following command in Terminal:

opencv_traincascade -data classifier -vec samples/samples.vec -bg negatives.txt -numStages 6 -minHitRate 0.95 -numPos 27 -numNeg 1613 -w 60 -h 64 -precalcValBufSize 512 -precalcIdxBufSize 256

opencv_traincascade is the program to be run. The value for data is the name of the folder in which to store the resulting cascade file. The value for vec is the path to the samples vector file. The value for bg is the name of the file containing paths to each negative image. I am not entirely sure about numStages, so I just picked 6 since I didn’t want the training to run for days as others have experienced. minHitRate sets the minimum fraction of positive samples that each stage must classify correctly. numPos I still don’t quite understand, but I chose ~80% of the number of positive images to ensure no errors would result. numNeg is the number of negative images. Then there are the sample width and height (w and h) and two buffer settings that specify how much RAM the program can hog up.
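To get a feel for what these numbers imply, here is a small back-of-the-envelope sketch. The overall rates follow the estimate in the OpenCV documentation (the per-stage rate raised to the number of stages, with maxFalseAlarmRate defaulting to 0.5 when it is not passed). The positives_needed formula is a rule of thumb that circulates in community answers, not something from the official docs or from this post, so treat it as an assumption.

```python
import math


def overall_rates(num_stages, min_hit_rate, max_false_alarm_rate=0.5):
    """Estimate the whole cascade's hit rate and false alarm rate."""
    return min_hit_rate ** num_stages, max_false_alarm_rate ** num_stages


def positives_needed(num_pos, num_stages, min_hit_rate, skipped=0):
    """Rule-of-thumb number of samples the .vec file should hold (an assumption).

    Each stage after the first may discard up to (1 - min_hit_rate) of the
    positives, and the trainer has to replace them from the .vec file.
    """
    extra = (num_stages - 1) * (1 - min_hit_rate) * num_pos
    return math.ceil(num_pos + extra + skipped)


hit, false_alarm = overall_rates(num_stages=6, min_hit_rate=0.95)
needed = positives_needed(num_pos=27, num_stages=6, min_hit_rate=0.95)
print(f"hit rate ~{hit:.3f}, false alarm rate ~{false_alarm:.4f}, vec samples >= {needed}")
```

With the values from the command above, the estimated overall hit rate is about 0.735, the false alarm rate about 0.0156, and the rule of thumb asks for at least 34 samples in the vector file, which would explain why keeping numPos somewhat below the number of positive images avoids errors.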

I had high hopes, but after 30 minutes of fans-blaring CPU use the program quit with the error, “Required leaf false alarm rate achieved. Branch training terminated.” I need to do more research to figure out why it didn’t work, but an initial search told me that the number of positive samples I used may not be enough. Joy..

Next Steps:

- Play around with OpenCV some more to try to get a functional cascade. Maybe enlist the help of stackoverflow or reddit.

- Rethink whether object recognition is the best way to maximize runaway slave ad discovery. While a lot of ads did use the icon, perhaps a larger number did not. For newspapers with digital transcriptions, text-based analysis would surely provide better results.

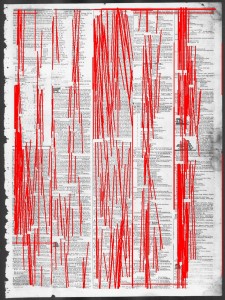

- If we can’t get a working cascade to do object recognition, revisit newspaper decomposition. Franco and I tried using Hough Line Transforms through OpenCV to detect the lines separating newspaper articles, but to no avail. Its promise is marked-up images like the Sudoku board shown below. To the right of it is our “success.” The theory is that if we could detect the dividing lines in newspapers, we could crop the pages into individual articles, run OCR on each article, and then do text analysis to discover runaway ads. It is no easy feat, though, as these [1, 2] research articles demonstrate.

I was able to improve our results by limiting detected lines to those with approximately horizontal or vertical slopes, since those are the only ones we are interested in for newspapers, but it is clear we need to tweak the script or enlist a better system.

Sudoku hough line transform
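The slope filter mentioned above can be sketched roughly as follows. This is not our actual script: the function names, the 5-degree tolerance, and the Canny and Hough parameters are placeholder assumptions, and it uses OpenCV's probabilistic variant (cv2.HoughLinesP), which returns line segments as endpoint pairs.

```python
import math


def is_axis_aligned(x1, y1, x2, y2, tolerance_deg=5.0):
    """True if the segment is within tolerance of horizontal or vertical."""
    angle = math.degrees(math.atan2(y2 - y1, x2 - x1)) % 180.0  # 0 <= angle < 180
    near_horizontal = min(angle, 180.0 - angle) <= tolerance_deg
    near_vertical = abs(angle - 90.0) <= tolerance_deg
    return near_horizontal or near_vertical


def filter_segments(segments, tolerance_deg=5.0):
    """Keep only the roughly horizontal or vertical (x1, y1, x2, y2) segments."""
    return [s for s in segments if is_axis_aligned(*s, tolerance_deg=tolerance_deg)]


def detect_rules(image_path, tolerance_deg=5.0):
    """Find candidate column and article dividers on one page image."""
    import cv2  # opencv-python, imported lazily so the filter works without it
    import numpy as np

    gray = cv2.imread(image_path, cv2.IMREAD_GRAYSCALE)
    if gray is None:
        raise FileNotFoundError(image_path)
    edges = cv2.Canny(gray, 50, 150)  # thresholds are guesses
    lines = cv2.HoughLinesP(
        edges, 1, np.pi / 180, threshold=100, minLineLength=100, maxLineGap=10
    )
    segments = [] if lines is None else [tuple(int(v) for v in l[0]) for l in lines]
    return filter_segments(segments, tolerance_deg)
```

Widening tolerance_deg would help with pages that were scanned slightly askew, at the cost of letting more stray lines through.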
